Visitors interacting with Ameca the robot at the AI for Good Global Summit, UN Photo / Elma Okic

Biden’s AI Safety Plan: Bureaucracy in the Age of Artificial Intelligence

Amid the buzz spurred by this week’s global AI Safety Summit, one new attempt to regulate artificial intelligence is at the forefront. Within minutes of the release of the Biden Administration’s “landmark” executive order on Monday, news and media figures descended on the document. Whether it is the most comprehensive effort yet to regulate powerful artificial intelligence technologies or ill-informed rulemaking that undermines intra-government cooperation, the order has been criticized both for going too far and for not going far enough in the quest to tackle the most significant threats posed by AI.

If the stakes seem high, that’s because they are. This morning’s Bletchley Declaration highlighted AI’s “catastrophic” danger, echoing an earlier warning of the “risk of extinction from AI” signed by Bill Gates and OpenAI CEO Sam Altman, as well as Elon Musk’s concerns over AI’s potential for “civilization destruction.” If artificial intelligence becomes a staple of combat as rapidly as it has become a staple of commerce, the internet, and transportation over the past few years, experts say it will soon revolutionize warfare and even expedite a shift in the global balance of power. Under this impending artificial intelligence arms race, technological first-movers gain an advantage over large governments, a threat no nation should take lightly.

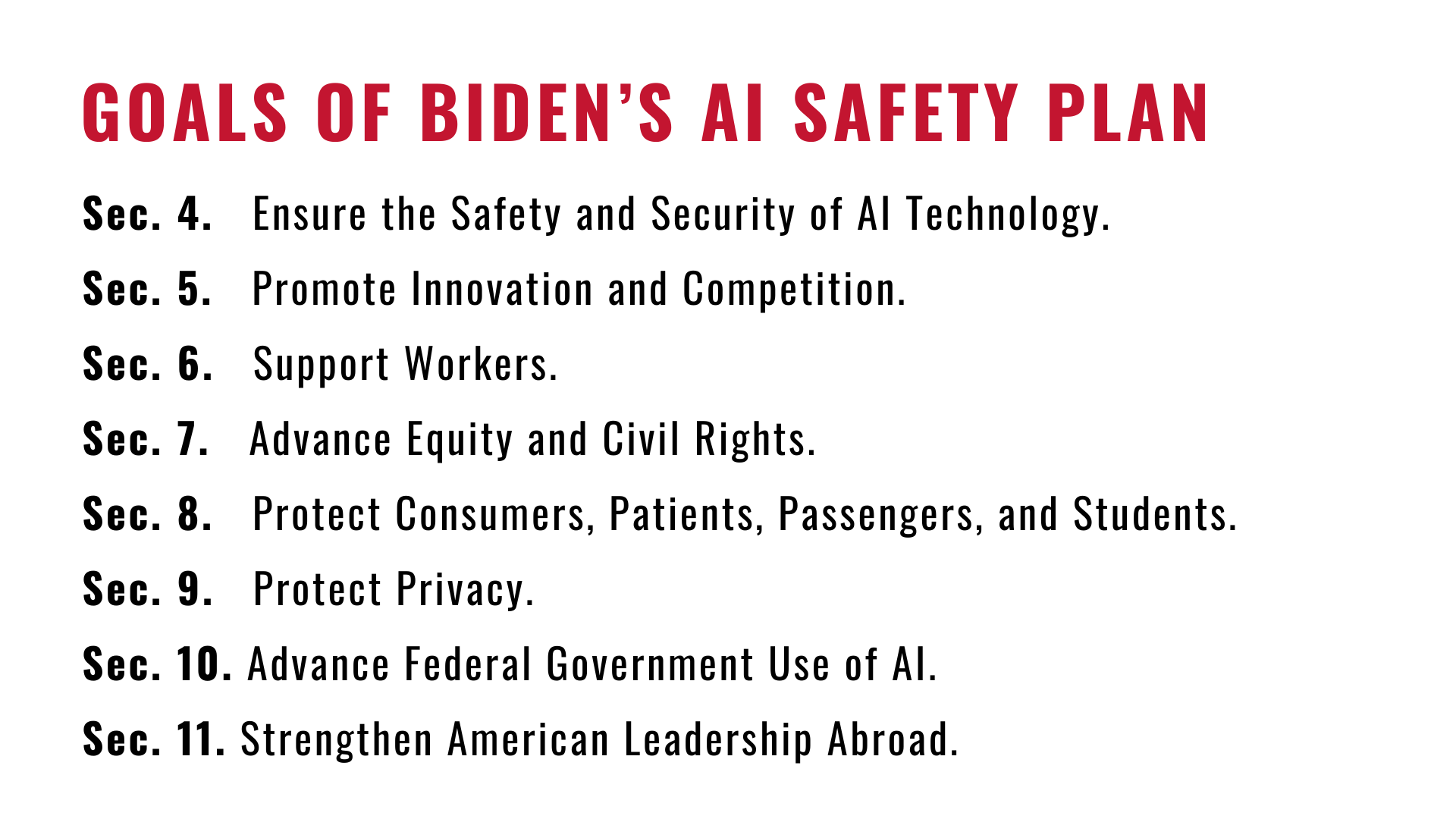

Amid this apocalyptic rhetoric, the Biden administration’s order fights back with bureaucracy. The document delegates previously stated goals rather than introducing new ones, institutionalizes artificial intelligence development standards, and specifies oversight mechanisms across several offices and agencies. Eight policy sections highlight major AI risks and lay out deadlines for agencies to craft targeted plans to prevent the worst-case outcomes.

Because the order focuses on logistics rather than on the policy prescriptions themselves, the success of Biden’s AI safety plan will depend on several newly introduced experts and task forces. With lofty goals such as ensuring fair use of AI throughout the criminal justice system, transforming AI education, and “building an equitable clean energy economy for the future,” these bodies are expected to work in tandem while “taking into account the views of other agencies, industry, members of academia, civil society, labor unions, international allies and partners, and other relevant organizations.” This means that the policies that ultimately determine whether the United States will remain the global leader in AI will be decided not by the executive branch but by groups of experts tasked with developing guardrails and oversight mechanisms down the line, plus whatever other entities they consult in the process.

While critics argue that shuffling AI regulation onto other agencies amounts to kicking the can down the road, targeted and decentralized oversight of AI development may be the best-case scenario until the broader implications of the technology are better understood. Better yet, the density and specificity of the order indicate that federal discourse surrounding artificial intelligence may finally be maturing beyond doom-and-gloom rhetoric. After all, while agreements like the Bletchley Declaration can smooth international tensions stoked by the code war between the U.S. and China, they do little to prevent bad actors from operating at the local and national levels. Executive authorization is necessary to criminalize and penalize AI misdeeds, and to open the door for the funding that makes higher-level goals happen.

Due to a combination of robust expectations, lack of specificity, and good timing, the positive response from key regulators and industry leaders to Biden’s Safe AI Order greatly outweighs the negative. Despite this, America’s leadership in responsible and competitive AI is far from secure. The hardest and most important body to bring on board is Congress; most of the initiatives contained in the order rely on funding and support from legislators to be effective, yet Congress has historically been reluctant to pass federal legislation regarding critical technologies. Lawmakers like Senate Majority Leader Chuck Schumer have recently come around to the idea, partly thanks to sustained discourse with big tech representatives in ongoing hearings and Hill meetings. It remains to be seen, however, whether Congress will authorize funding to combat the looming threat, and how much.

In the complex landscape of artificial intelligence, the Biden Administration’s Safe AI Order serves as a pivotal, if emotionally underwhelming, milestone. It addresses the pressing need for regulatory guidance while giving experts the time and authority to craft industry-specific regulations. As policymakers look beyond doomsday scenarios, practical measures to ensure responsible development are critical to maintaining America’s ongoing leadership in AI. As the world grapples with the technology’s transformative potential, only time will tell whether the order will be a catalyst for ethical AI innovation or an idealistic aspiration pitted against an unready bureaucracy.